
AI is set to revolutionize our work practices, offering a significant competitive advantage to early adopters. While numerous companies have already harnessed AI for automated chatbot applications to handle customer inquiries, some are yet to familiarize themselves with this emergent technology. A key question arises: how effective is AI in these applications?

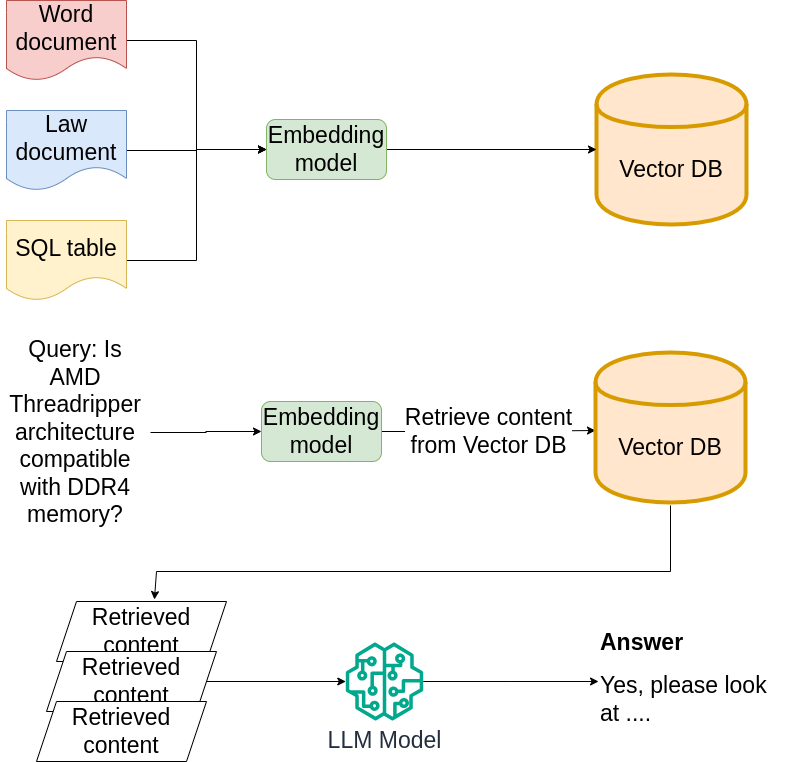

One challenge with publicly available LLMs like ChatGPT or Google’s models is their limited access to real-time data, with exceptions like the Bing plugin. However, even with this plugin, extracting data directly from one’s own website remains a hurdle. Overcoming this limitation without resorting to costly fine-tuning of the model is a significant challenge. RAG to the rescue!

Integrating AI with Retrieval-augmented generation

A transformative technology in the integration of AI is Retrieval-Augmented Generation (RAG). RAG combines the power of language models like GPT with external knowledge sources, enabling the AI to retrieve and use factual information from a database or a corpus of documents. This approach is particularly beneficial in AI applications for several reasons:

- Enhanced Accuracy and Relevance: RAG allows chatbots to access a vast range of information, ensuring that the responses are not just contextually relevant but also factually accurate. This is especially useful for technical support queries that require specific, detailed information.

- Dynamic Knowledge Base: Unlike traditional AI models that rely on pre-trained knowledge, RAG-equipped chatbots can pull the latest data from external sources. This means they can provide up-to-date information on products, services, and policies, adapting to changes without needing retraining.

- Personalized Customer Experience: RAG can tailor responses based on customer history or preferences by accessing customer data (with proper privacy safeguards). This personalization enhances customer satisfaction as the responses are more aligned with individual needs and past interactions.

- Cost-Effective Updating: Keeping an AI model’s knowledge base current is challenging, especially for rapidly evolving industries. RAG addresses this by accessing external, up-to-date databases, reducing the need for frequent retraining of the model.

- Scalability and Versatility: RAG can be applied to various customer support scenarios, from simple FAQ-based interactions to complex problem-solving tasks. Its ability to scale and adapt to different types of queries makes it a versatile tool in the customer support arsenal.
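To make the retrieve-then-generate idea concrete, here is a minimal sketch in Python. It is an illustration under assumptions, not a production setup: the three documents are made up, and retrieval uses a simple word-count cosine similarity in place of the embedding models and vector databases that real RAG systems typically rely on. The function names (`retrieve`, `build_prompt`) are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in practice this would be your own website or docs.
DOCUMENTS = [
    "Orders can be returned within 30 days of delivery for a full refund.",
    "Standard shipping takes 3 to 5 business days within the EU.",
    "Our support team is available Monday to Friday from 9:00 to 17:00.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=1):
    """Return the top_k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine_similarity(query_vec, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query, documents, top_k=1):
    """Put the retrieved passages into the prompt that would be sent to the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, top_k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
    )


print(build_prompt("What is the refund policy for returned orders?", DOCUMENTS))
```

The model never needs retraining here: updating the answer is a matter of editing `DOCUMENTS`, which is the point behind the "Dynamic Knowledge Base" and "Cost-Effective Updating" items above.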

The Rise of Autonomous Agents is key to integrating AI

The integration of autonomous agents in AI applications can dramatically enhance the quality of interaction. They are capable of handling complex queries, providing personalized responses, and even learning from each interaction to improve over time. Moreover, their ability to reason and think autonomously reduces the dependency on human intervention, thereby scaling the customer support capabilities of a business.

As AI continues to evolve, these autonomous agents are set to become more sophisticated, offering unprecedented levels of support.

A significant leap in AI is the advent of autonomous agents, exemplified by systems like the LangChain agent. These agents represent a more advanced form of AI interaction: they don’t just respond to queries but actively engage in a multi-step reasoning process. This process typically involves several stages:

- Question Understanding: The agent starts by comprehensively understanding the customer’s query. This involves parsing the question for key information and context.

- Thought Process: Unlike traditional chatbots, an autonomous agent engages in an internal thought process. It considers various aspects of the query, drawing on its training and any available data. This process might involve breaking down the question into sub-questions, hypothesizing different scenarios, or retrieving relevant information.

- Observation: The agent then actively seeks out additional information if needed. This could be from an external knowledge base, similar to the RAG model, or through internal logic and reasoning systems. This step is crucial for queries that require up-to-date information or for those that are complex and multifaceted.

- Thought Synthesis: After gathering all necessary information, the agent synthesizes its thoughts. It combines the initial understanding of the query with new insights gained during the observation phase. This synthesis is where the agent forms a coherent response strategy.

- Final Answer: Finally, the agent delivers its response. The answer is not just based on pre-defined scripts but is a result of the agent’s ‘thinking’ process, making it more tailored and accurate to the user’s query.
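The stages above can be sketched as a small loop. This is a simplified illustration and not LangChain’s actual API: `scripted_model` is a hard-coded stand-in for an LLM call, and `lookup_order` is a hypothetical tool backed by a made-up dictionary. The thought/action/observation structure is the part worth noticing.

```python
def lookup_order(order_id):
    """Hypothetical tool: stands in for a call to a real order system."""
    orders = {"A100": "shipped", "B200": "processing"}
    return orders.get(order_id, "unknown")


TOOLS = {"lookup_order": lookup_order}


def scripted_model(question, observations):
    """Stand-in for an LLM: asks for a tool first, then answers from the observation."""
    if not observations:
        # Assumes the order ID is the last word of the question.
        order_id = question.split()[-1].strip("?")
        return {
            "thought": "I need the order status.",
            "action": "lookup_order",
            "input": order_id,
        }
    return {
        "thought": "I have what I need.",
        "final_answer": f"Your order is {observations[-1]}.",
    }


def run_agent(question, model, tools, max_steps=5):
    """Loop: think, optionally call a tool, observe, and stop at a final answer."""
    observations = []
    trace = []
    for _ in range(max_steps):
        step = model(question, observations)
        trace.append(step["thought"])
        if "final_answer" in step:
            return step["final_answer"], trace
        tool = tools[step["action"]]
        observations.append(tool(step["input"]))
    return "I could not find an answer.", trace


answer, trace = run_agent("Where is my order A100?", scripted_model, TOOLS)
print(answer)  # Your order is shipped.
print(trace)
```

The `max_steps` limit is a deliberate design choice: because the model decides when to stop, a cap keeps a confused agent from looping forever.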

Case examples of integrating AI

Examining real-world applications is one of the best ways to understand the practical impact and capabilities of AI technologies. The case studies below, from customer support to financial analysis and legal assistance, illustrate the dynamic and versatile nature of AI in various sectors. These examples not only demonstrate AI’s potential to enhance efficiency and accuracy but also highlight how it can be tailored to meet specific industry needs.

Customer support

Scenario: A retail company integrates an AI-driven chatbot into its website and mobile app to handle customer inquiries.

Implementation: The chatbot, powered by a GPT-like model, is trained to understand and respond to a wide range of customer queries, from tracking orders to handling returns and exchanges. The AI can engage in natural, conversational interactions, providing instant responses 24/7.

Technologies Used:

- Large language models for natural language understanding and generation.

- Integration APIs to connect the chatbot with the company’s customer service database and order management system.
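As a rough sketch of what the integration layer might look like, the snippet below pulls an order number out of a customer message and turns the matching record into a short context string that could be passed to the language model. Everything here is an assumption for illustration: `ORDER_SYSTEM` is an in-memory stand-in for a real order management system, and the five-digit order number format is made up.

```python
import re

# Hypothetical in-memory stand-in for the order management system.
ORDER_SYSTEM = {
    "10234": {"status": "shipped", "eta": "2 days"},
    "10235": {"status": "processing", "eta": "5 days"},
}


def extract_order_id(message):
    """Find a five-digit order number in the message (assumed format)."""
    match = re.search(r"\b(\d{5})\b", message)
    return match.group(1) if match else None


def build_context(message):
    """Turn the order lookup into a context line for the chatbot's prompt."""
    order_id = extract_order_id(message)
    if order_id is None:
        return "No order number found; ask the customer for it."
    order = ORDER_SYSTEM.get(order_id)
    if order is None:
        return f"Order {order_id} was not found."
    return f"Order {order_id}: status={order['status']}, eta={order['eta']}."


print(build_context("Hi, where is order 10234?"))
```

Keeping the lookup in ordinary code and handing only the result to the model means the chatbot answers from the company’s actual records rather than from what the model happens to remember.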

Data Analysis and Reporting

Scenario: A financial services firm employs AI for analyzing market trends and generating comprehensive reports.

Implementation: The AI system is fed large volumes of financial data, including market trends, historical stock performance, and economic indicators. It analyzes this data to identify patterns and generate predictive models. The system then produces detailed reports with insights into market behaviors, risk assessments, and investment opportunities.

Technologies Used:

- Advanced data analytics tools and algorithms for pattern recognition and predictive modeling.

- Large language models for generating coherent, detailed, and understandable reports.

- Data visualization tools integrated with AI to present findings in an accessible format for decision-makers.

Integrating AI in the Legal Sector as a Law Assistant

Scenario: A legal firm implements an AI-driven law assistant to aid lawyers in research and case preparation.

Implementation Details:

- Legal Research: The AI assistant is designed to quickly sift through vast legal databases and retrieve relevant statutes, case law, and legal precedents. It understands natural language queries from lawyers, allowing them to ask questions in plain English and receive precise legal references in return.

- Case Analysis: Based on the inputs provided (like case facts, relevant jurisdiction, etc.), the AI tool analyzes previous cases with similar circumstances, providing insights into how courts have ruled in the past and identifying key legal arguments that proved effective.

- Document Drafting: The AI assistant helps in drafting legal documents such as briefs, contracts, and legal notices. It suggests language that has been successful in similar cases and ensures compliance with the current legal standards and formats.

- Client Interaction: The AI tool can provide initial legal consultation based on clients’ queries. It can handle routine legal questions, offering quick guidance and freeing up lawyers’ time for more complex tasks.

Technologies Used:

- Natural Language Processing (NLP) for understanding and responding to legal queries and for document drafting.

- Legal database integration to access up-to-date statutes, case law, and legal articles.

Investigating the cost/benefit ratio

Many small and medium-sized businesses are eager to embrace the generative AI wave, yet they often struggle to assess its costs and benefits. Presently, companies face two primary options: leveraging paid cloud services such as OpenAI’s ChatGPT, Google’s Vertex AI, or alternatives offered by AWS, or opting for openly available models like Mistral 7B or Llama 2, which are currently available for commercial use at no cost, subject to their license terms. However, choosing these models requires considering hosting and possibly significant GPU server resources, especially for model fine-tuning.

OpenAI states the following:

- We do not train on your data from ChatGPT Enterprise or our API Platform

- You own your inputs and outputs (where allowed by law)

- You control how long your data is retained (ChatGPT Enterprise)

The question then arises: how can these claims be verified? Many companies might be hesitant to jump on that train.

How can a business that holds private, sensitive data transform with AI?

Global companies may face challenges in adhering to GDPR and other privacy regulations while sharing sensitive data, like personal information, with AI service providers such as Google, Amazon, or OpenAI. There’s a risk that this data could inadvertently be included in training datasets or be leaked. One viable option for these companies is to use custom LLMs, either trained from scratch on their own data or fine-tuned from public models like Meta’s Llama 2 and Mistral.

Running inference on your own or cloud hardware

Alternatively, businesses can run free, openly available AI models on custom on-premises hardware or opt for cloud computing solutions. Cloud platforms offer advantages such as scalability and cost-efficiency, particularly for intermittent computing needs (see AWS Lambda and Step Functions). Take models like Llama 2 with quantized weights: on marketplaces like vast.ai, a cloud A100 GPU can be rented for as low as $1 per hour. According to NVIDIA’s page, an A100 GPU can produce output as fast as 11 sentences per second, quite fascinating!
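Those two figures are enough for a back-of-the-envelope cost estimate. The small calculation below uses only the numbers quoted above ($1 per hour, 11 sentences per second) and assumes, optimistically, that the GPU is busy generating for the whole hour. Real utilization will be lower, so treat the result as a lower bound on cost.

```python
def cost_per_1000_sentences(price_per_hour, sentences_per_second):
    """Rental cost in USD for generating 1,000 sentences at full utilization."""
    sentences_per_hour = sentences_per_second * 3600
    return price_per_hour / sentences_per_hour * 1000


# Figures quoted in the text: $1 per hour, 11 sentences per second.
cost = cost_per_1000_sentences(price_per_hour=1.0, sentences_per_second=11)
print(f"${cost:.4f} per 1,000 sentences")  # $0.0253 per 1,000 sentences
```

At full load that is 39,600 sentences per hour, or roughly 2.5 cents per thousand sentences. If the GPU sits idle 90% of the time, the effective cost is ten times higher, which is why intermittent workloads favor pay-per-use options.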

What is the cost of GPT providers?

Understanding the cost of integrating AI, particularly Large Language Models like GPT offered by OpenAI, is crucial for businesses and software engineers. Here’s a concise breakdown of the pricing.

OpenAI GPT-4 Pricing

OpenAI’s GPT-4 model uses a token-based billing system. A ‘token’ in this context refers to a piece of text, roughly equivalent to four characters of English text. Input and output tokens are billed at different rates, listed in the table below.

Google Vertex AI pricing

Google Vertex AI, on the other hand, uses character-based pricing for its AI services, including models like Gemini. Prices are calculated per 1,000 characters for both input and output, with rates varying depending on the services and models used.

| Model | Input price | Output price |
| --- | --- | --- |
| OpenAI GPT-4, 8k context | $0.03 per 1,000 tokens | $0.06 per 1,000 tokens |
| OpenAI GPT-4, 32k context | $0.06 per 1,000 tokens | $0.12 per 1,000 tokens |
| OpenAI GPT-3.5 Turbo, 4k context | $0.0015 per 1,000 tokens | $0.002 per 1,000 tokens |
| OpenAI GPT-3.5 Turbo, 16k context | $0.003 per 1,000 tokens | $0.004 per 1,000 tokens |
| Google PaLM 2 for Text | $0.0005 per 1,000 characters | $0.0005 per 1,000 characters |
| Google PaLM 2 for Chat | $0.0005 per 1,000 characters | $0.0005 per 1,000 characters |
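Because one provider bills per token and the other per character, the prices are easier to compare once converted to a common unit. The sketch below estimates the cost of a single request from its length in characters, using prices copied from the table above. It assumes roughly four characters per token for English text, which is a common rule of thumb rather than an exact figure, and the dictionary keys are labels chosen for this example, not official API model names.

```python
# Prices in USD per 1,000 units, copied from the table above.
# Keys are illustrative labels, not official API model identifiers.
PRICES = {
    "gpt-4-8k": {"unit": "tokens", "input": 0.03, "output": 0.06},
    "gpt-3.5-turbo-4k": {"unit": "tokens", "input": 0.0015, "output": 0.002},
    "palm-2-text": {"unit": "characters", "input": 0.0005, "output": 0.0005},
}

CHARS_PER_TOKEN = 4  # assumption: rough average for English text


def estimate_cost(model, input_chars, output_chars):
    """Estimate the USD cost of one request from its input and output lengths."""
    price = PRICES[model]
    # Convert characters to tokens only for token-billed models.
    divisor = CHARS_PER_TOKEN if price["unit"] == "tokens" else 1
    input_units = input_chars / divisor
    output_units = output_chars / divisor
    return (input_units * price["input"] + output_units * price["output"]) / 1000


# Example: a 2,000-character prompt with a 1,000-character answer.
for name in PRICES:
    print(f"{name}: ${estimate_cost(name, 2000, 1000):.5f}")
```

For this example request, GPT-4 with 8k context comes to about 3 cents, while GPT-3.5 Turbo and PaLM 2 both land well under a cent, so model choice matters far more than the billing unit.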

Conclusion and Future Outlook

Looking at AI integration across various sectors, from customer support to legal and financial services, it’s clear that this technology holds transformative potential. The balance between leveraging advanced AI capabilities and ensuring data privacy, especially in compliance with regulations like GDPR, remains a critical consideration. The adoption of AI, whether through paid services or open-source platforms, necessitates a careful evaluation of cost, efficiency, and data security.

Looking forward, the landscape of AI integration in business is poised for rapid evolution. The trend towards customizing LLMs for specific industry needs, coupled with advancements in ensuring data privacy, is likely to accelerate. Moreover, the shift towards more cost-effective AI solutions, such as the utilization of cloud or on-premise hardware for running AI models, reflects a growing democratization of AI technology.

As AI continues to mature, we can expect to see more sophisticated applications emerging. These will not only enhance operational efficiencies but also drive innovation in areas like predictive analytics, personalized customer experiences, and automated decision-making processes. The future of AI in business, therefore, promises not only enhanced efficiency and scalability but also new avenues for strategic growth and competitive advantage.

In conclusion, businesses venturing into AI adoption must navigate the intricate balance between harnessing the power of AI and safeguarding data privacy. This journey, though complex, opens up a realm of possibilities for innovation and growth in the digital era.

We have already detailed the future outlook of medical equipment and AI in the previous post and cardiac healthcare here.

In case you have any questions or are interested in giving it a shot, feel free to leave a comment below. For more personalized inquiries or to get started, drop me an email at [email protected].
